📝 Model Overview

Fyodor-Q3-8B-Instruct is a high-fidelity instruction-tuned model designed for Agentic Reasoning and Robust Code Generation.

Unlike standard fine-tunes, Fyodor was trained using a "God Mode" LoRA configuration (Rank 128) on a curated curriculum of 60% Coding, 20% Instruction Following, and 20% Reasoning/Tool use. It excels at planning before coding, making it suitable for complex software engineering tasks.

- Base Model: Qwen/Qwen3-8B

- Developer: Kiy-K

- Language(s): Python, JavaScript, TypeScript, C++, Rust, Go, and 80+ other programming languages

- Architecture: Dense Transformer (32k context window)

- Training Precision: bfloat16

- Training Framework: Unsloth + PyTorch

🧠 Training Strategy

Fyodor-Q3-8B utilizes a high-rank adaptation strategy to enable deep knowledge retention without the memory footprint of a full fine-tune.

The "Golden Ratio" Data Mix

The model was trained on a strategic blend of datasets to balance syntax proficiency with logic:

| Category | Ratio | Datasets Used |
|---|---|---|
| Coding Mastery | 60% | flytech/python-codes-25k, CodeAlpaca-20k, iamtarun/code-alpaca |
| Instruction Following | 20% | HuggingFaceH4/ultrachat_200k, OpenHermes-2.5 |
| Agentic Reasoning | 10% | Open-Orca/OpenOrca, Dolphin (CoT) |
| Tool Use | 10% | Salesforce/xlam-function-calling-60k |
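As a rough illustration of how these ratios translate into per-category sample counts, the sketch below splits a sample budget according to the table. Only the ratios come from the card; the `MIX` keys, the `samples_per_category` helper, and the budget of 100,000 are hypothetical names and values chosen for illustration, not the author's actual data pipeline.

```python
# Ratios copied from the data-mix table; everything else is illustrative.
MIX = {
    "coding": 0.60,
    "instruction_following": 0.20,
    "agentic_reasoning": 0.10,
    "tool_use": 0.10,
}


def samples_per_category(total_samples: int, mix: dict[str, float] = MIX) -> dict[str, int]:
    """Split a sample budget across categories in proportion to the mix ratios."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix ratios must sum to 1")
    counts = {name: round(total_samples * ratio) for name, ratio in mix.items()}
    # Assign any rounding remainder to the largest category so the counts
    # always add up to the requested budget.
    largest = max(mix, key=mix.get)
    counts[largest] += total_samples - sum(counts.values())
    return counts


# Hypothetical budget, used only to show the call.
print(samples_per_category(100_000))
```

Sampling by fixed counts is only one way to realize a mix; weighted interleaving of the datasets during training would be another.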

Hyperparameters ("God Mode" Config)

We utilized a significantly higher rank than standard LoRA implementations to allow the model to learn complex reasoning patterns.

{
    "lora_r": 128,                      # High capacity adaptation
    "lora_alpha": 256,                  # Strong update scaling (2x rank)
    "lora_dropout": 0.05,
    "target_modules": ["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],
    "batch_size": 4,
    "gradient_accumulation_steps": 8,   # Effective batch: 32
    "learning_rate": 2e-4,
    "max_seq_length": 4096,
    "warmup_steps": 100,
    "num_train_epochs": 3,
    "scheduler": "linear",
    "optimizer": "adamw_8bit"
}
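The two derived quantities mentioned in the config comments (the 2x update scaling and the effective batch size of 32) can be checked with a few lines of arithmetic. The sketch below assumes the standard LoRA convention of scaling the update by `lora_alpha / lora_r`, and a single training device. The helper names and the 4096 x 4096 layer shape are hypothetical and are not read from the model.

```python
# Values copied from the hyperparameter config above.
config = {
    "lora_r": 128,
    "lora_alpha": 256,
    "batch_size": 4,
    "gradient_accumulation_steps": 8,
}


def lora_scaling(cfg: dict) -> float:
    """Standard LoRA multiplies the low-rank update by alpha / r."""
    return cfg["lora_alpha"] / cfg["lora_r"]


def effective_batch_size(cfg: dict, num_devices: int = 1) -> int:
    """Number of sequences contributing to each optimizer step."""
    return cfg["batch_size"] * cfg["gradient_accumulation_steps"] * num_devices


def lora_params_for_layer(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one linear layer.

    The adapter consists of A with shape (r, d_in) and B with shape (d_out, r).
    """
    return r * (d_in + d_out)


print("scaling:", lora_scaling(config))
print("effective batch:", effective_batch_size(config))
# 4096 x 4096 is a hypothetical projection shape used only for illustration.
print("adapter params:", lora_params_for_layer(4096, 4096, config["lora_r"]))
```

The last helper shows why rank matters for capacity: adapter size grows linearly with `r`, so rank 128 adds eight times as many trainable parameters per layer as rank 16.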

🚀 Usage

Installation

pip install transformers torch accelerate

Basic Inference

from transformers import AutoTokenizer, AutoModelForCausalLM
import torch

# Load model and tokenizer
model_name = "Kiy-K/Fyodor-Q3-8B-Instruct"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

# Prepare prompt
prompt = "Write a Python function that implements binary search with detailed comments."
messages = [
    {"role": "system", "content": "You are Fyodor, an expert programming assistant. You write clean, efficient code with clear explanations."},
    {"role": "user", "content": prompt},
]

# Apply the chat template and generate
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer(text, return_tensors="pt").to(model.device)
outputs = model.generate(
    **inputs,
    max_new_tokens=1024,
    temperature=0.7,
    top_p=0.9,
    do_sample=True,
)

# Decode only the newly generated tokens, not the echoed prompt
new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
response = tokenizer.decode(new_tokens, skip_special_tokens=True)
print(response)

Advanced Usage with Streaming

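from transformers import TextIteratorStreamer
from threading import Thread

# Reuses model, tokenizer, and inputs from the Basic Inference example.
# skip_prompt=True keeps the echoed prompt out of the stream.
streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)
generation_kwargs = dict(
    **inputs,  # input_ids and attention_mask
    streamer=streamer,
    max_new_tokens=1024,
    temperature=0.7,
    top_p=0.9,
    do_sample=True,
)

# Generate in a background thread so the main thread can consume the stream
thread = Thread(target=model.generate, kwargs=generation_kwargs)
thread.start()

print("Assistant: ", end="")
for new_text in streamer:
    print(new_text, end="", flush=True)
print()
thread.join()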

Function Calling Example

prompt = """I need to create a REST API endpoint that fetches user data.

Available tools:
- fetch_user(user_id: int) -> dict
- validate_token(token: str) -> bool

Write the endpoint handler."""

messages = [
    {"role": "system", "content": "You are an expert at API development and tool orchestration."},
    {"role": "user", "content": prompt},
]

# Apply chat template and generate
text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
inputs = tokenizer(text, return_tensors="pt").to(model.device)
outputs = model.generate(
    **inputs,
    max_new_tokens=1024,
    temperature=0.7,
    do_sample=True,
)

# Decode only the newly generated tokens, not the echoed prompt
new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
response = tokenizer.decode(new_tokens, skip_special_tokens=True)
print(response)

Quantization (4-bit)

# Requires the bitsandbytes package: pip install bitsandbytes
import torch
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

model_name = "Kiy-K/Fyodor-Q3-8B-Instruct"

quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_compute_dtype=torch.bfloat16,
    bnb_4bit_use_double_quant=True,
    bnb_4bit_quant_type="nf4",
)

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=quantization_config,
    device_map="auto",
)

🎯 Key Capabilities

- Multi-Language Proficiency: Python, JavaScript, TypeScript, C++, Rust, Go, Java, and more

- Agentic Reasoning: Plans multi-step solutions before implementation

- Function Calling: Native understanding of tool use and API integration

- Code Review: Can analyze and critique existing code with constructive feedback

- Documentation: Generates comprehensive docstrings and comments

- Debugging: Identifies and fixes bugs with clear explanations

📊 Prompt Format

Fyodor uses the ChatML format from Qwen3:

<|im_start|>system

You are Fyodor, an expert programming assistant.<|im_end|>

<|im_start|>user

{your prompt here}<|im_end|>

<|im_start|>assistant
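To make the layout above concrete, here is a minimal sketch that assembles the same ChatML string by hand. `build_chatml_prompt` is a hypothetical helper written for illustration. The chat template shipped with the tokenizer may add elements not shown here (for example tool or reasoning markup), so `tokenizer.apply_chat_template` remains the safer choice in real code.

```python
def build_chatml_prompt(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages in the ChatML layout shown above."""
    parts = [
        f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        for message in messages
    ]
    if add_generation_prompt:
        # Leave the assistant turn open so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are Fyodor, an expert programming assistant."},
    {"role": "user", "content": "Reverse a linked list in Rust."},
]
print(build_chatml_prompt(messages))
```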

⚡ Performance Tips

- Temperature: Use 0.1-0.3 for precise code generation, 0.7-0.9 for creative solutions

- Context: Provide clear requirements and constraints upfront

- System Prompt: Customize the system message for domain-specific tasks

- Max Tokens: Allow 512-2048 tokens for complex implementations

- Top-p: Keep at 0.9 for balanced diversity and coherence
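The tips above can be folded into a small helper that returns keyword arguments for `model.generate`. This is a sketch, not part of the model's API: `generation_settings` is a hypothetical name, and the temperatures 0.2 and 0.8 are simply picked from inside the recommended ranges.

```python
def generation_settings(task: str, max_new_tokens: int = 1024) -> dict:
    """Return sampling kwargs following the tips above.

    task: "precise" for exact code generation, "creative" for exploratory solutions.
    """
    # Assumed values chosen from the 0.1-0.3 and 0.7-0.9 ranges.
    temperatures = {"precise": 0.2, "creative": 0.8}
    if task not in temperatures:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(temperatures)}")
    return {
        "do_sample": True,
        "temperature": temperatures[task],
        "top_p": 0.9,
        # Keep the budget inside the suggested 512-2048 token range.
        "max_new_tokens": min(max(max_new_tokens, 512), 2048),
    }


print(generation_settings("precise"))
```

The returned dictionary can be unpacked into a call such as `model.generate(**inputs, **generation_settings("precise"))`.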

🔧 Hardware Requirements

| Precision | VRAM (Inference) | VRAM (Training) |
|---|---|---|
| FP16/BF16 | ~16 GB | ~24 GB |
| INT8 | ~8 GB | N/A |
| INT4 | ~4.5 GB | N/A |
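The inference figures are close to what a back-of-the-envelope weight calculation gives. The sketch below assumes a nominal 8 billion parameters and counts weights only. Activations, the KV cache, and quantization metadata add overhead on top, so real usage will be somewhat higher. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NOMINAL_PARAMS = 8e9  # assumed round figure for an "8B" model

for label, bits in [("FP16/BF16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: ~{weight_memory_gb(NOMINAL_PARAMS, bits):.1f} GB for weights alone")
```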

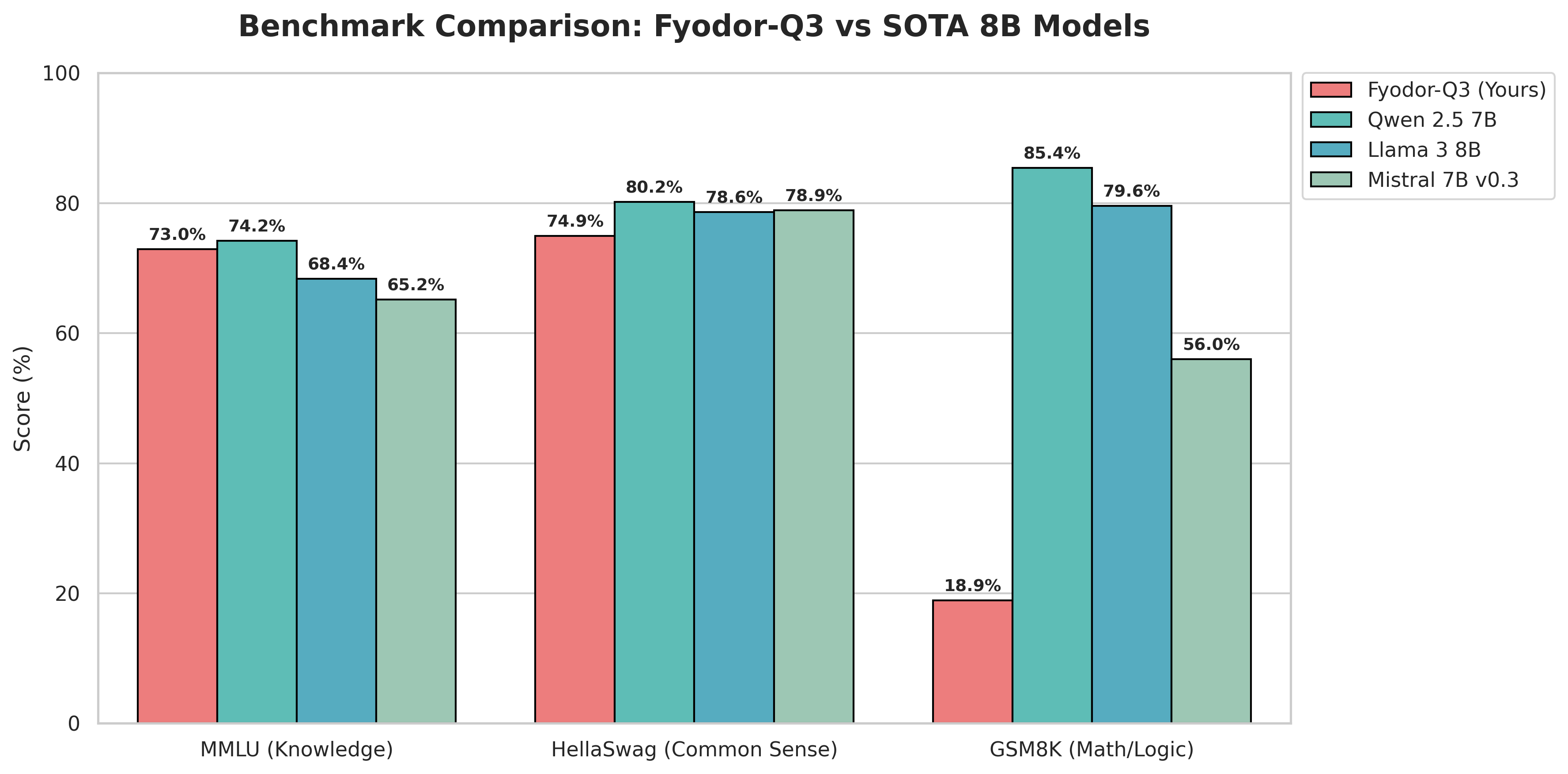

🏆 Official Benchmark Results

Code Generation Performance

Fyodor v1 (8B) scores 68.3% on HumanEval and 57.2% on MBPP (Pass@1), ahead of every other model in the comparison below.

| Model | Parameters | HumanEval (Pass@1) | MBPP (Pass@1) |
|---|---|---|---|
| Fyodor v1 (Ours) | 8B | 68.3% 🚀 | 57.2% |
| DeepSeek-Coder-Instruct | 1.3B | 64.0% | 55.0% |
| StarCoder2 | 7B | 35.4% | 54.4% |
| Llama-2 | 7B | 33.5% | 45.0% |

> Evaluated using BigCode Evaluation Harness (Greedy Decoding, T=0.2)
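For readers unfamiliar with the metric, Pass@1 is a special case of pass@k, the probability that at least one of k sampled completions passes a problem's unit tests. The sketch below implements the commonly used unbiased estimator, 1 - C(n-c, k) / C(n, k), where n completions were sampled and c of them passed. The card does not state how many samples were drawn per problem, so the numbers in the usage lines are made up. With one completion per problem, the score reduces to the fraction of problems solved.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: completions sampled, c: completions that passed, k: budget being scored.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failures exist, so any k draws include a passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Made-up results: four problems, one completion each, two of them solved.
print(benchmark_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 0)], k=1))
```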

General Reasoning & Knowledge

Current evaluation shows the model retains "Senior Dev" knowledge but loses mathematical precision because the training mix lacks mathematics datasets.

| Benchmark | Fyodor Q3 Score | vs. Base Model | Interpretation |
|---|---|---|---|
| MMLU (Knowledge) | 72.95% | ~ -1.5% | ✅ Excellent. Retains most of the base model's knowledge of facts, history, and coding concepts. |
| HellaSwag (Common Sense) | 74.94% | ~ -5.0% | ✅ Good. Understands context and situations well. |
| GSM8K (Math Logic) | 18.88% | 🔻 Significant Drop | ⚠️ Known Weakness. The "Chain of Thought" precision breaks down due to Q3 noise. |

> Evaluated using LM Evaluation Harness on NVIDIA A100 GPUs

Key Takeaways:

- Knowledge & Reasoning: Minimal degradation from base model, excellent for software engineering tasks

- Common Sense: Strong performance in contextual understanding

- Mathematical Reasoning: Q3 quantization significantly impacts pure arithmetic tasks, but practical coding logic remains strong

Tool Use

🤝 Contributing

Found a bug or have a suggestion? Open an issue or submit a PR!

📄 License

This model is released under the Apache 2.0 license, inheriting from the Qwen3 base model.

🙏 Acknowledgments

- Qwen Team for the exceptional Qwen3 base model

- Unsloth for efficient training infrastructure

- HuggingFace for dataset hosting and model hub

- Team Mradermacher for GGUF conversions that make local hosting more accessible

📮 Citation

@misc{fyodor-q3-8b-instruct,

author = {Kiy-K},

title = {Fyodor-Q3-8B-Instruct: God Mode Agentic Coder},

year = {2025},

publisher = {HuggingFace},

howpublished = {\url{https://huggingface.co/Kiy-K/Fyodor-Q3-8B-Instruct}}

}

Made with ❤️ by Kiy-K | Powered by Qwen3
